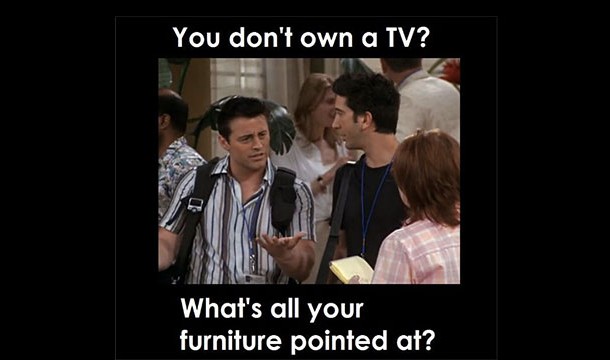

But let’s bring this closer to home. Even in the little things, like fashion, style, and dialect, Hollywood plays a role in shaping our own society as Americans. Now, this is often not a bad thing. There are gazillions of things that Hollywood does in fact portray accurately about the nation: we drive big cars, we have lots of space, people are friendly, and the cities are huge. All true. But today we’re going to focus on the things that might not be so true. The bad part, of course, is that some people unfortunately believe them anyway. So, whether you are American or not, these are 25 things people think are true because of Hollywood!

Featured Image: pixabay